Neural Network Training

Training a neural network is not much different from training logistic regression. We first have to construct a computational graph, which allows us to apply the chain rule while propagating the gradients from the loss function all the way back to the weights and biases.

To emphasise this idea again, we are going to use an example of a neural network with two neurons in the hidden layer and a single output neuron. As usual we will assume a single training sample to avoid overcomplicated computational graphs.

Let's first zoom in on the output neuron of the neural network. We disregard the loss function for the moment to keep things simple, but keep in mind that the full graph would also contain the cross-entropy or the mean squared error.

We use the (L)ayer (N)euron (W)eight/(B)ias notation for weights and biases. L2 N1 W2 for example stands for weight 2 of the first neuron in the second layer of the neural network.

If you look at the graph above, you should notice that this neuron is no different from a plain vanilla logistic regression graph. Yet instead of using the input features to calculate the output, we use the hidden features $a_1$ and $a_2$. Each of the hidden features is based on a different logistic regression with its own set of weights and a bias. So when we use backpropagation, we do not stop at $a_1$ or $a_2$, but keep moving towards the earlier weights and biases.

The graph above only includes two hidden sigmoid neurons, but in theory a graph can contain hundreds of layers with hundreds of neurons each. Automatic differentiation libraries construct the computational graph and calculate the gradients for us, no matter the size of the neural network.
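As a minimal sketch of that idea, the snippet below builds the small network from this section (two hidden sigmoid neurons, one output neuron) for a single sample and lets PyTorch's autograd compute the gradients. The input values and the random initialisation are illustrative assumptions, and the loss function is left out, as in the graph above.

```python
import torch

torch.manual_seed(0)

# a single training sample with two input features (illustrative values)
x = torch.tensor([[0.5, -1.0]])

# hidden layer: two sigmoid neurons, each with two weights and one bias
W1 = torch.randn(2, 2, requires_grad=True)
b1 = torch.randn(1, 2, requires_grad=True)

# output layer: one sigmoid neuron that consumes the hidden features a_1 and a_2
W2 = torch.randn(1, 2, requires_grad=True)
b2 = torch.randn(1, 1, requires_grad=True)

a_hidden = torch.sigmoid(x @ W1.T + b1)      # hidden features a_1 and a_2
a_out = torch.sigmoid(a_hidden @ W2.T + b2)  # output neuron

# backpropagation does not stop at a_1 and a_2: autograd follows the graph
# back to the weights and biases of the hidden layer as well
a_out.sum().backward()

for name, p in [("W1", W1), ("b1", b1), ("W2", W2), ("b2", b2)]:
    print(name, tuple(p.grad.shape))
```

Every parameter receives a gradient with the same shape as the parameter itself, including the hidden-layer weights that sit behind $a_1$ and $a_2$.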

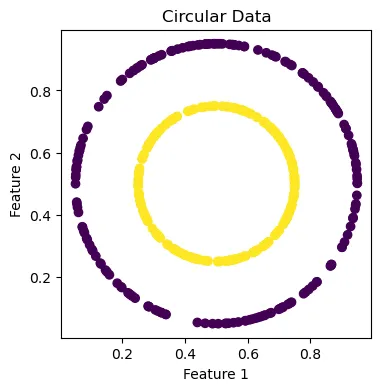

Now let's remember that our original goal is to solve a nonlinear problem of the kind shown below.

Our neural network will take the two features as input, process them through a hidden layer with four neurons and finally produce the output of a single neuron: the probability of belonging to one of the two categories. This probability is used to measure the cross-entropy loss.

In the example below you can observe how the decision boundary moves when you use backpropagation. Usually 10,000 steps are sufficient to find weights for a good decision boundary, which might take a couple of minutes. Try to observe how the cross-entropy and the shape of the decision boundary change over time. At a certain point you will most likely see a sharp drop in the cross-entropy. This is when things start to improve significantly.

While the example above provides an intuitive introduction to the world of neural networks, we need a way to formalize these calculations through mathematical notation.

As we have covered in previous chapters, we can calculate the value of a neuron $a$ in a two-step process. In the first step we calculate the net input $z$ by multiplying the feature vector $\mathbf{x}$ with the transpose of the weight vector, $\mathbf{w}^T$, and adding the bias scalar $b$. In the second step we apply an activation function $f$ to the net input.

Info

Given that we have a feature vector $\mathbf{x} = \begin{bmatrix} x_1 & x_2 & x_3 & \cdots & x_m \end{bmatrix}$ and a weight vector $\mathbf{w} = \begin{bmatrix} w_1 & w_2 & w_3 & \cdots & w_m \end{bmatrix}$, we can calculate the output of the neuron $a$ in a two-step procedure:

$$z = \mathbf{x}\mathbf{w}^T + b$$

$$a = f(z)$$
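The two steps can be sketched in a few lines of PyTorch. The numbers below are made up for illustration, and the sigmoid is assumed as the activation function $f$.

```python
import torch

# illustrative values: one sample with m = 3 features
x = torch.tensor([[1.0, 2.0, 3.0]])    # feature vector, shape 1 x m
w = torch.tensor([[0.1, -0.2, 0.3]])   # weight vector, shape 1 x m
b = torch.tensor(0.5)                  # bias scalar

# step 1: net input, 0.1 - 0.4 + 0.9 + 0.5 = 1.1
z = x @ w.T + b

# step 2: activation function (sigmoid assumed here)
a = torch.sigmoid(z)

print(z.item(), a.item())
```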

In practice we utilize a dataset $\mathbf{X}$ consisting of many samples. As usual, $\mathbf{X}$ is an $n \times m$ matrix, where $n$ (rows) is the number of samples and $m$ (columns) is the number of input features.

Similarly, we need to be able to calculate several neurons in a layer. Each neuron in a particular layer receives the same set of inputs, but has its own set of weights $\mathbf{w}$ and its own bias $b$. For convenience it makes sense to collect the weights in a matrix $\mathbf{W}$ and the biases in a vector $\mathbf{b}$.

The weight matrix $\mathbf{W}$ is a $d \times m$ matrix, where $m$ is the number of features from the previous layer and $d$ is the number of neurons (hidden features) we want to calculate for the next layer.

$\mathbf{b}$ is a $1 \times d$ vector of biases.

We can calculate the net input matrix $\mathbf{Z}$ and the activation matrix $\mathbf{A}$ using the exact same operations we used before.

Info

Given that we have a feature matrix $\mathbf{X}$ and a weight matrix $\mathbf{W}$, we can calculate the activation matrix $\mathbf{A}$ in a two-step procedure:

$$\mathbf{Z} = \mathbf{X}\mathbf{W}^T + \mathbf{b}$$

$$\mathbf{A} = f(\mathbf{Z})$$

The result is an $n \times d$ matrix.
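To make the shapes concrete, here is a small sketch with arbitrary sizes ($n = 5$ samples, $m = 3$ features, $d = 4$ neurons) and random values. The sigmoid is again assumed as the activation function. The bias vector is broadcast across all $n$ rows.

```python
import torch

torch.manual_seed(0)

n, m, d = 5, 3, 4          # samples, input features, neurons (arbitrary sizes)
X = torch.randn(n, m)      # feature matrix, n x m
W = torch.randn(d, m)      # weight matrix, d x m (one row per neuron)
b = torch.randn(1, d)      # bias vector, 1 x d

Z = X @ W.T + b            # net inputs, n x d (b is broadcast over the rows)
A = torch.sigmoid(Z)       # activations, n x d

# entry (i, j) of Z is the single-neuron formula applied to sample i and neuron j
z_single = X[0] @ W[2] + b[0, 2]

print(A.shape)
print(torch.allclose(Z[0, 2], z_single, atol=1e-6))
```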

Usually we deal with more than a single layer. We distinguish between layers by using the superscript $l$. The matrix $\mathbf{W}^{<1>}$, for example, contains the weights that are multiplied with the input features.

For the first layer we get:

$$\mathbf{A}^{<1>} = f\left(\mathbf{X}(\mathbf{W}^{<1>})^T + \mathbf{b}^{<1>}\right)$$

For all the other layers we get:

$$\mathbf{A}^{<l>} = f\left(\mathbf{A}^{<l-1>}(\mathbf{W}^{<l>})^T + \mathbf{b}^{<l>}\right)$$

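We can represent the same idea as a deeply nested function composition.

$$\mathbf{A}^{<L>} = f\left(\cdots f\left(f\left(\mathbf{X}(\mathbf{W}^{<1>})^T + \mathbf{b}^{<1>}\right)(\mathbf{W}^{<2>})^T + \mathbf{b}^{<2>}\right)\cdots(\mathbf{W}^{<L>})^T + \mathbf{b}^{<L>}\right)$$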

We keep iterating over matrix multiplications and activation functions until we reach the output layer $L$, whose activations are used as input to the loss function.
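One way to sketch this composition in code is to fold a list of layers over the input with `functools.reduce`. The layer sizes `[2, 4, 2, 1]`, the random parameters and the helper name `layer` are illustrative assumptions, and the sigmoid is assumed for every layer.

```python
from functools import reduce

import torch

torch.manual_seed(0)

# illustrative architecture: 2 inputs, hidden layers with 4 and 2 neurons, 1 output
shape = [2, 4, 2, 1]

# one (W, b) pair per layer; W is d x m and b is 1 x d
params = [
    (torch.randn(d, m), torch.randn(1, d))
    for m, d in zip(shape[:-1], shape[1:])
]

def layer(A_prev, Wb):
    """Hypothetical helper: apply one layer to the activations of the previous one."""
    W, b = Wb
    return torch.sigmoid(A_prev @ W.T + b)

X = torch.rand(6, 2)             # six random samples with two features each

# reduce applies the layers from first to last, i.e. the nested composition
A_L = reduce(layer, params, X)

print(A_L.shape)
```

Each application of `layer` corresponds to one pair of parentheses in the nested expression. The final result has one row per sample and one column per output neuron.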

We can implement a neural network relatively easily using PyTorch.

import torch
import numpy as np
import matplotlib.pyplot as plt

We first create a circular dataset and plot the results.

# create circular data
def circular_data():
    radii = [0.45, 0.25]
    center_x = 0.5
    center_y = 0.5
    num_points = 200
    X = []
    y = []

    # one circle per class; the index of the radius doubles as the class label
    for label, radius in enumerate(radii):
        for point in range(num_points):
            angle = 2 * np.pi * np.random.rand()
            feature_1 = radius * np.cos(angle) + center_x
            feature_2 = radius * np.sin(angle) + center_y
            X.append([feature_1, feature_2])
            y.append([label])

    return torch.tensor(X, dtype=torch.float32), torch.tensor(y, dtype=torch.float32)

X, y = circular_data()

feature_1 = X.T[0]
feature_2 = X.T[1]

# plot the circle
plt.figure(figsize=(4,4))
plt.scatter(x=feature_1, y=feature_2, c=y)
plt.title("Circular Data")
plt.xlabel("Feature 1")
plt.ylabel("Feature 2")
plt.show()

We implement the logic of the neural network by creating a NeuralNetwork class. We assume a network with two input neurons, two hidden layers with 4 and 2 neurons respectively, and an output neuron.

class NeuralNetwork:
    def __init__(self, X, y, shape=[2, 4, 2, 1], alpha = 0.1):
        self.X = X
        self.y = y
        self.alpha = alpha
        self.weights = []
        self.biases = []

        # initialize weights and biases with random numbers
        for num_features, num_neurons in zip(shape[:-1], shape[1:]):
            weight_matrix = torch.randn(num_neurons, num_features, requires_grad=True)
            self.weights.append(weight_matrix)
            bias_vector = torch.randn(1, num_neurons, requires_grad=True)
            self.biases.append(bias_vector)

    def forward(self):
        # start from the stored features, not from a global variable
        A = self.X
        for W, b in zip(self.weights, self.biases):
            Z = A @ W.T + b
            A = torch.sigmoid(Z)
        return A

    def loss(self, y_hat):
        # binary cross-entropy, averaged over all samples
        loss = -(self.y * torch.log(y_hat) + (1 - self.y) * torch.log(1 - y_hat)).mean()
        return loss

    # update weights and biases
    def step(self):
        # the update itself must not be tracked by autograd
        with torch.no_grad():
            for w, b in zip(self.weights, self.biases):
                # gradient descent
                w.sub_(w.grad * self.alpha)
                b.sub_(b.grad * self.alpha)
                # zero out the gradients
                w.grad.zero_()
                b.grad.zero_()

The code is relatively self-explanatory. The forward() method multiplies the feature matrix from the previous layer with the transposed weight matrix of the current layer, adds the bias vector and applies the sigmoid. The loss() method calculates the binary cross-entropy, and the step() method applies gradient descent and zeroes out the gradients.
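The reason for zeroing the gradients is that PyTorch accumulates gradients across calls to `backward()` instead of overwriting them. The following toy sketch, using a made-up quadratic loss rather than the network above, illustrates the effect.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

def loss_fn(w):
    # toy loss whose gradient is simply 2 * w
    return (w ** 2).sum()

loss_fn(w).backward()
first = w.grad.clone()          # gradient after one backward pass

loss_fn(w).backward()           # second backward pass without zeroing
accumulated = w.grad.clone()    # the new gradient was added to the old one

w.grad.zero_()                  # reset, as step() does
loss_fn(w).backward()
fresh = w.grad.clone()          # same as after the first pass

print(first, accumulated, fresh)
```

Without the reset, every gradient descent step would use the sum of all gradients computed so far rather than the gradient of the current iteration.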

nn = NeuralNetwork(X, y)

Finally we run the forward pass, the backward pass and the gradient descent steps in a loop of 50,000 iterations.

# training loop
for i in range(50_000):
    y_hat = nn.forward()
    loss = nn.loss(y_hat)
    if i % 10000 == 0:
        print(loss.data)
    loss.backward()
    nn.step()

tensor(0.8799)
tensor(0.6817)
tensor(0.0222)
tensor(0.0038)
tensor(0.0019)

The cross-entropy loss decreases drastically and, unlike our custom implementation above, the PyTorch implementation takes only a couple of seconds to run.
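For comparison, the same architecture can also be sketched with PyTorch's built-in building blocks (`torch.nn.Sequential`, `torch.nn.BCELoss` and `torch.optim.SGD`) instead of a hand-written class. This is an alternative sketch, not the implementation used in this section: the dataset is regenerated with torch only, the default `torch.nn.Linear` initialisation differs from `torch.randn`, and the loop is kept deliberately short, so the loss values will not match the output above.

```python
import math

import torch

torch.manual_seed(0)

# regenerate a circular dataset: 100 points on each of two circles
angles = 2 * math.pi * torch.rand(200)
radii = torch.cat([torch.full((100,), 0.45), torch.full((100,), 0.25)])
X = torch.stack(
    [radii * torch.cos(angles) + 0.5, radii * torch.sin(angles) + 0.5], dim=1
)
y = torch.cat([torch.zeros(100, 1), torch.ones(100, 1)])

# same layer sizes as before: 2 -> 4 -> 2 -> 1, sigmoid after every layer
model = torch.nn.Sequential(
    torch.nn.Linear(2, 4), torch.nn.Sigmoid(),
    torch.nn.Linear(4, 2), torch.nn.Sigmoid(),
    torch.nn.Linear(2, 1), torch.nn.Sigmoid(),
)
criterion = torch.nn.BCELoss()                            # binary cross-entropy
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)   # plain gradient descent

losses = []
for _ in range(200):                  # short run, for illustration only
    optimizer.zero_grad()             # zero out the gradients
    loss = criterion(model(X), y)     # forward pass and loss
    loss.backward()                   # backward pass
    optimizer.step()                  # gradient descent update
    losses.append(loss.item())

print(losses[0], losses[-1])
```

The four calls inside the loop correspond one to one to the zeroing, forward(), loss.backward() and step() logic of the hand-written class.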